Creating Depth, Part 1: Introduction, DOF, Deep Staging, Resolution

Depth perception is a basic ability of human vision: it is through depth that we judge distances and spatial relations. But depth is inherently a three-dimensional concept, so capturing the three-dimensional world in a two-dimensional image makes depth hard to preserve. These challenges stem mostly from the fact that, unlike the real world, a two-dimensional image lacks stereo cues, and stereo vision is a major component of depth perception. This is one limitation that 3D cinema tries to overcome. This article, though, is about 2D images and the ways to exploit cues unrelated to stereo vision (monocular cues) to suggest depth.

Why is depth important for a 2D image?

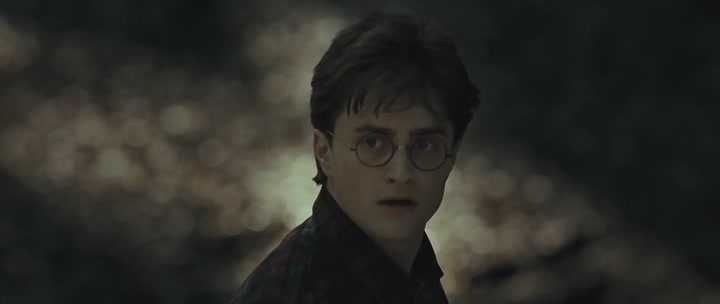

Exteriors naturally lend themselves to deep images. There are at least 4 significant distance planes here.

Depth is not a universal quality to look for in an image, but most non-abstract images benefit from an enhanced illusion of depth. After all, an image renders a reality (existing or imagined), and reality is 3D, so a cinematic 2D representation should appear sufficiently three-dimensional. Depth defines space. Injecting depth into an image furthers the viewer’s spatial awareness and helps orient them within the depicted world. Ideally, the objects in the frame should appear to recede behind the screen.

This is depth’s main function in a cinematic image, but not the only one. There are often purely pictorial benefits: multiple depth planes create visual interest and invite the eye to wander and explore the frame. This may or may not be desirable, depending on content and intent. An extreme close-up, for example, will usually gain nothing from distractions and complexity. And sometimes an image needs to be unclear or claustrophobic, or to imply a confined space, whereas depth opens space and gives scope.

Without the ability to triangulate distances through stereo (binocular) vision, spatial perception draws on experience of the spatial relations between objects, and the brain needs other cues to perceive depth. These cues are usually about differentiating space: in one way or another, they lead the brain to separate objects, infer a space between them, and identify multiple depth planes in the frame. So helping to separate scene elements and enhancing the sense of distance between planes promotes the illusion of depth. The cinematographer manages depth through choice of viewpoint and camera movement, composition and blocking, and lighting. But other tricks can help too, and we will cover some of them.

Painters often distinguish foreground, middle ground and background, though a scene can have more discernible distance planes. In general, we can assume that at least two discernible distance planes are necessary for a reasonable definition of space in a frame.

Depth of field

One misguided “truth” often found on the internet is that full frame cameras render images with more depth because they can produce a shallower apparent depth of field than Super35/APS-C. This is wrong on many levels, starting with pure semantics: shallow focus and depth don’t really belong in the same sentence. Shallow focus is not the same as separation. The real advantage of larger sensors is better pop and delineation (see the last section below).

Showing the relations between objects in the scene requires enough depth of field to keep those objects recognizable. But slight defocusing, with objects falling smoothly and gradually out of focus as their distance from the focus point increases, can enhance the illusion of depth. There are two reasons for this. First, slight defocus mimics the workings of the eye (especially in dim environments), creating a natural image. Second, it simulates one of the cues of depth perception: texture gradient. The eye sees nearby objects in fine detail, while objects in the distance appear less detailed. Although this cue is usually associated with linear perspective, a similar effect occurs as objects gradually fall out of focus. Artists sometimes emulate the eye’s slight defocus by painting only the main subject in fine detail.
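The gradual fall-off described above can be made concrete with the standard thin-lens blur-disc formula. This is a minimal sketch under simplifying assumptions (an ideal thin lens, no aberrations or diffraction); the 50 mm, f/4 and 3 m values are arbitrary illustration choices, and `blur_disc_mm` is a hypothetical helper name.

```python
# Thin-lens model of defocus: the size of the blur disc that a point at a
# given distance produces on the sensor when the lens is focused elsewhere.
# Idealized: assumes a perfect thin lens and ignores aberrations/diffraction.


def blur_disc_mm(focal_mm: float, f_number: float,
                 focus_mm: float, subject_mm: float) -> float:
    """Blur-disc diameter on the sensor (mm) for a point at subject_mm,
    with a lens of focal_mm at f_number focused at focus_mm.

    All distances are measured from the lens and must exceed focal_mm.
    """
    if min(focus_mm, subject_mm) <= focal_mm:
        raise ValueError("distances must be greater than the focal length")
    aperture_mm = focal_mm / f_number  # entrance pupil diameter
    return (aperture_mm * focal_mm * abs(subject_mm - focus_mm)
            / (subject_mm * (focus_mm - focal_mm)))


if __name__ == "__main__":
    focal, n, focus = 50.0, 4.0, 3000.0  # 50 mm at f/4, focused at 3 m
    for metres in (3, 4, 6, 10, 30):
        c = blur_disc_mm(focal, n, focus, metres * 1000.0)
        print(f"{metres:>3} m: blur disc {c:.3f} mm")
    # As distance grows, the blur approaches a finite limit: A * f / (s_f - f).
    limit = (focal / n) * focal / (focus - focal)
    print(f"limit at infinity: {limit:.3f} mm")
```

Behind the focus point, the blur grows continuously with distance and levels off toward a finite limit (about 0.21 mm in this example), so distant objects lose detail progressively rather than abruptly.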

Bokeh brightness variation creates a nice frame, but calling the background a “depth plane” would be taking “plane” a bit too literally.

Very shallow focus is a viable cinematic device for some purposes, but it will never render a space with sufficient depth. Anything in front of or behind the focus point becomes an impressionistic blur, isolating the focused object. Deep focus is often thought to yield flatness because every element in the scene is equally sharp. But where depth is concerned, equal sharpness is far less objectionable (if at all) than very shallow focus, and it can even lend pictorial qualities to an image. And while there is no way to inject depth into a very shallow focus picture, there are ways to separate depth planes in a deep focus image.
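The gap between very shallow and deep focus can be quantified with the common hyperfocal-distance approximation for depth of field. A minimal sketch, assuming a full frame circle of confusion of 0.03 mm (a conventional but arbitrary choice) and a 50 mm lens focused at 3 m; `hyperfocal_mm` and `dof_limits_mm` are hypothetical names.

```python
import math


def hyperfocal_mm(focal_mm: float, f_number: float, coc_mm: float) -> float:
    """Hyperfocal distance: focusing here keeps everything from about half
    this distance to infinity acceptably sharp."""
    return focal_mm ** 2 / (f_number * coc_mm) + focal_mm


def dof_limits_mm(focal_mm: float, f_number: float, focus_mm: float,
                  coc_mm: float = 0.03) -> tuple[float, float]:
    """Near and far limits of acceptable sharpness (mm from the lens).

    coc_mm is the circle of confusion; 0.03 mm is a common full frame value.
    The far limit is math.inf when focus_mm is at or beyond hyperfocal.
    """
    h = hyperfocal_mm(focal_mm, f_number, coc_mm)
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    far = (focus_mm * (h - focal_mm) / (h - focus_mm)
           if focus_mm < h else math.inf)
    return near, far


if __name__ == "__main__":
    for n in (1.4, 11.0):
        near, far = dof_limits_mm(50.0, n, 3000.0)
        print(f"f/{n:g}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Under these assumptions, f/1.4 keeps only about 0.3 m around the subject sharp, while f/11 covers roughly 2.2 m to 4.9 m, enough for several distinct planes to stay readable.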

Deep staging

Deep staging (or deep space) refers to a specific approach to blocking action and camera, in which important elements of the scene are placed on different depth planes. This creates natural distance points for the eye to wander to. Deep staging is often used with long takes, sometimes including dolly, Steadicam or handheld camera moves. It is emphasized by actors entering the frame, creating an additional plane of interest; by actors leaving the frame, shifting interest to another plane; or by actors moving from one plane to another, creating depth vectors in the frame. A variant of the last is the so-called “walking into a close-up” shot, used by Hitchcock, John Ford, Kalatozov and others, in which the actor(s) move from a deeper plane to the foreground, ending in a medium close-up or tighter.

Managing depth planes: first, Bernstein (right) lowering the paper introduces Thatcher (left) and creates a new plane; then Kane, moving through the depth planes, establishes the full scope of the shot. Note how the true size of the room and the windows is revealed only after Kane stands in the deep background.

Deep focus and deep staging complement each other beautifully. But modern practice usually combines deep staging with selective focus, racking focus between planes to guide the viewer’s attention according to the filmmaker’s intent. There is also a tendency to rely on coverage and to defer the construction of a sequence to editing, and coverage leans more heavily on close-ups, over-the-shoulder shots and other standard frames. None of this plays well with imaginative deep staging and deep focus. Neither does the shift towards using less light: deep focus and long takes require both commitment before shooting and relatively small apertures (and therefore more light). This makes staging deep interiors a challenge.

Films by the duo Kalatozov/Urusevsky often feature deeply staged shots with pronounced foregrounds and expressionistic camera angles.

Deep staging and deep focus were used extensively by some of the greats: Orson Welles, Roman Polanski, Kurosawa and Mizoguchi, among others. Citizen Kane is the textbook example. Welles and cinematographer Gregg Toland used torrents of light, a wide lens (24 mm), small apertures (f/8 to f/16) and split focus to render its startlingly deep interiors. 24 mm may sound tame by today’s standards: since the film was shot in the Academy format, 24 mm is equivalent to a humble 39 mm on full frame (in horizontal FOV). But at the time it was considered unforgiving to actors and used sparingly, and certainly not with actors in the foreground. 50 mm was used universally for interiors, and apertures of f/4 or larger were the norm. Welles and Toland coupled their choice of optics with genuinely deep staging for maximum effect, infusing their images with drama.
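The 39 mm figure follows from matching horizontal field of view: two lenses give the same horizontal FOV when focal length scales in proportion to frame width. A minimal sketch, assuming an Academy camera aperture about 22 mm wide and the 36 mm width of full frame; the constant and helper names are hypothetical.

```python
import math

ACADEMY_WIDTH_MM = 22.0     # approximate Academy camera aperture width (assumption)
FULL_FRAME_WIDTH_MM = 36.0  # 36 x 24 mm full frame still format


def horizontal_fov_deg(focal_mm: float, width_mm: float) -> float:
    """Horizontal angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(width_mm / (2 * focal_mm)))


def equivalent_focal_mm(focal_mm: float, width_mm: float,
                        target_width_mm: float = FULL_FRAME_WIDTH_MM) -> float:
    """Focal length giving the same horizontal FOV on the target format."""
    return focal_mm * target_width_mm / width_mm


if __name__ == "__main__":
    for focal in (24.0, 50.0):
        eq = equivalent_focal_mm(focal, ACADEMY_WIDTH_MM)
        fov = horizontal_fov_deg(focal, ACADEMY_WIDTH_MM)
        print(f"{focal:.0f} mm on Academy ~ {eq:.0f} mm full frame, "
              f"horizontal FOV {fov:.1f} deg")
```

By the same arithmetic, the 50 mm that the text describes as the norm for interiors corresponds to roughly 82 mm on full frame.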

Left: a classic three-person, three-plane arrangement with a strong foreground. Middle: another beautifully crafted three-plane shot, with the figure in the deep background giving scope to the set. Right: an example of split focus.

Sharpness and 3D pop

Before moving on to more interesting stuff, let’s touch on a minor point. The quality of the lens and the resolution of the medium affect textures and texture gradient. An image lacking clarity at its focus point will appear flatter than a crisper image, and when coupled with slight focus fall-off, the crisp image will show a more readily noticeable texture gradient.

Bottom image is a softened version of the original (top), approximating a softer lens. Note the weaker delineation of the subject and the softer textures, resulting in a subtly flatter image.
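One simple way to model a softer lens, used here as an assumption and not necessarily how the comparison image was made, is a Gaussian blur. The MTF of a Gaussian blur with standard deviation σ is exp(-2π²σ²f²), so it strips fine detail (high spatial frequency f) far faster than coarse structure. The σ values and frequencies below are arbitrary illustration choices, and `gaussian_mtf` is a hypothetical name.

```python
import math


def gaussian_mtf(freq_cycles_per_px: float, sigma_px: float) -> float:
    """Fraction of contrast a Gaussian blur (std dev sigma_px, in pixels)
    preserves for a sinusoidal pattern of the given spatial frequency."""
    return math.exp(-2 * math.pi ** 2 * sigma_px ** 2 * freq_cycles_per_px ** 2)


if __name__ == "__main__":
    # 0.5 cycles/px is the Nyquist limit of the pixel grid.
    freqs = (0.02, 0.05, 0.1, 0.25, 0.5)
    for sigma in (0.5, 1.0, 2.0):
        row = ", ".join(f"{gaussian_mtf(f, sigma):.2f}" for f in freqs)
        print(f"sigma={sigma} px -> contrast kept at {freqs}: {row}")
```

Coarse shapes survive almost intact while fine texture loses most of its contrast, which matches the weaker delineation and flatter textures described in the caption.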

A more obscure quality related to resolution and optics is the so-called 3D pop: in some pictures, the subject in focus appears to pop out of its surroundings, and out of the image. Photographers sometimes call this Zeiss pop or Leica pop, depending on their affinities, because it tends to show in images shot with some Zeiss and Leica lenses. Pop is often mystified, and its origins can seem difficult to pinpoint. The most important element is delineation of the subject in focus. This needs excellent microcontrast (high MTF) at the spatial frequencies that contribute to the required resolution, preferably across the whole image field. Good MTF at higher spatial frequencies may look nice in an MTF chart, but it is at best irrelevant and at worst a source of aliasing. For example, a Full HD image from a full frame sensor will only benefit from spatial frequencies up to around 15 lp/mm. For the same pixel resolution, larger sensors have an advantage: compared to smaller sensors, they need this high MTF only at lower frequencies, which is easier to achieve. Low lens aberrations help produce clean edges, and the edges obviously need to be in sharp focus. Again, a bit of focus fall-off towards the background helps, but heavily blurred backgrounds will make the subject look like a cut-out. Decent subject-background contrast (through light and/or color) also contributes.
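The sensor-size argument is simple arithmetic: the same pixel count spread over a wider sensor samples coarser detail per millimetre. The sketch below computes the Nyquist (sampling) limit for Full HD’s 1920 horizontal pixels and rescales the text’s figure of around 15 lp/mm to a narrower sensor. The sensor widths (36 mm for full frame, 24.89 mm for Super35) are approximate assumptions and the helper names are hypothetical. Nyquist is only an upper bound set by the pixel grid; it does not by itself produce the practical figure in the text.

```python
# Sampling limit (Nyquist) of a given pixel count spread across a sensor
# width, expressed in line pairs per millimetre on the sensor.
# Sensor widths are approximate and chosen for illustration.

SENSOR_WIDTHS_MM = {
    "full frame": 36.0,
    "Super35": 24.89,
}
FULL_HD_WIDTH_PX = 1920


def nyquist_lp_per_mm(width_px: int, width_mm: float) -> float:
    """Highest spatial frequency the pixel grid can record: one line pair
    needs at least two pixels."""
    return width_px / (2 * width_mm)


def scale_frequency(lp_per_mm: float, from_width_mm: float,
                    to_width_mm: float) -> float:
    """The same detail in the final image maps to finer detail (more lp/mm)
    on a narrower sensor, in proportion to the width ratio."""
    return lp_per_mm * from_width_mm / to_width_mm


if __name__ == "__main__":
    for name, width in SENSOR_WIDTHS_MM.items():
        limit = nyquist_lp_per_mm(FULL_HD_WIDTH_PX, width)
        print(f"{name}: Nyquist {limit:.1f} lp/mm")
    # The text's ~15 lp/mm for full frame, moved to a Super35-width sensor:
    s35 = scale_frequency(15.0, SENSOR_WIDTHS_MM["full frame"],
                          SENSOR_WIDTHS_MM["Super35"])
    print(f"15 lp/mm on full frame ~ {s35:.1f} lp/mm on Super35")
```

Either way the ratio is about 1.45: for the same Full HD output, a Super35-width sensor asks the lens for good microcontrast at frequencies roughly 45% higher than full frame does.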

Pop’s connection to depth lies in interposition, one of the basic depth cues: when one object occludes another, the first object appears to be in front of the other, and closer to the observer. Popping (clearly delineating) the front object is one way to separate it from what lies behind. If the objects blend into each other, they may be perceived as a single entity.

Sharpness and pop are degraded by lossy image compression and will often get lost in heavily compressed video. Motion blur also obliterates them, which makes them largely irrelevant in scenes with motion and more applicable to still images.
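To see why motion blur swamps fine delineation, it helps to estimate the length of the smear. A minimal sketch, assuming the common 180° shutter at 24 fps (a 1/48 s exposure) and a hypothetical actor crossing a 1920-pixel-wide frame in two seconds; the helper names are illustrative.

```python
# Length of motion blur, in pixels, for an object moving across the frame
# during one exposure. Shutter angle and frame rate set the exposure time.


def exposure_time_s(fps: float, shutter_angle_deg: float) -> float:
    """Exposure per frame for a given (rotary) shutter angle."""
    return (shutter_angle_deg / 360.0) / fps


def motion_blur_px(speed_px_per_s: float, fps: float,
                   shutter_angle_deg: float = 180.0) -> float:
    """Distance (px) the image of a moving object travels during one exposure."""
    return speed_px_per_s * exposure_time_s(fps, shutter_angle_deg)


if __name__ == "__main__":
    # Hypothetical example: an actor crossing a 1920 px wide frame in 2 s.
    speed = 1920 / 2.0
    for angle in (180.0, 90.0, 45.0):
        print(f"{angle:.0f} deg shutter at 24 fps: "
              f"{motion_blur_px(speed, 24.0, angle):.1f} px of blur")
```

A 20-pixel smear at 180° is far coarser than the pixel-level edge detail that carries pop, which is why pop matters mainly in static or slow-moving frames.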

Part 2 of the Creating Depth series is on depth and perspective.