Shooting 4K Video for 2K Delivery: the Bit Depth Advantage

The first cheapish 4K cameras are here. The Panasonic GH4 is out and the Sony A7S is on the horizon. But nobody needs 4K, right? Even so, there is a lot to be gained from shooting in 4K. Shooting 4K for 2K/1080p delivery has a couple of advantages. First, you generally get sharper images by downscaling from 4K to 2K in post than by shooting 2K in-camera. Second, the downscaled image has increased color precision: you get 10-bit 2K video from 8-bit 4K video, or 12-bit 2K video from 10-bit 4K video. This advantage is sometimes misunderstood; some people go as far as saying there isn’t any (spoiler: they are wrong). So here is an explanation with examples.

4K video for 2K delivery: the theory

Image and video processing software works at high bit depth to minimize the precision errors introduced in the processing stages. A working precision of 16 or 32 bits is common. When a lower-precision image is imported, its values are scaled up to fit the higher working precision. For example, if we scale an 8-bit image to a 10-bit working precision, all values are multiplied by 4: 1 becomes 4, 2 becomes 8, 3 becomes 12, 100 becomes 400, and so on. Note the gaps between successive values. We really only have 8 meaningful bits, even though the working precision is 10 bits.
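A minimal Python sketch of that promotion, assuming a plain multiply-by-4 (a 2-bit left shift) exactly as described; real applications may instead normalize so that 8-bit 255 maps to the 10-bit maximum of 1023, or work in floating point. `to_working_precision` is a hypothetical helper name.

```python
def to_working_precision(value, source_bits=8, working_bits=10):
    """Promote an integer code value by multiplying by 2**(working_bits - source_bits)."""
    return value << (working_bits - source_bits)

examples = [1, 2, 3, 100]
print([to_working_precision(v) for v in examples])  # [4, 8, 12, 400]

# Every promoted 8-bit value is a multiple of 4: the two extra low bits stay zero,
# so only 256 of the 1024 possible 10-bit codes can ever occur.
promoted = [to_working_precision(v) for v in range(256)]
print(all(v % 4 == 0 for v in promoted), max(promoted))  # True 1020
```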

This higher working bit depth is fundamental to getting increased precision when downscaling video. The process won’t work if the working bit depth is the same as the source video bit depth.

To get our point across, let’s start with a simple case: a grayscale image. Suppose we have a camera that records both 4K and 2K grayscale images in 8 bits. Also, to keep the numbers small, suppose we are importing into processing software that works at 10-bit precision.

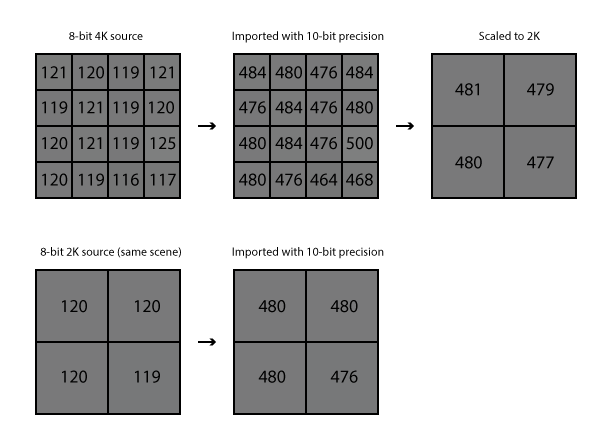

The following diagram shows what happens to both images after import. Image processors may use a whole range of sophisticated downscaling methods, but for simplicity let’s assume an algorithm that takes four neighboring pixels (a 2×2 block) and outputs a single averaged pixel in their place.

Importing a 4K 8-bit image into 10-bit working precision and downscaling to 2K effectively uses the whole 10-bit coding space. A 4×4 pixel block from the 4K image (above) compared with the corresponding 2×2 pixel block from the same scene shot in-camera in 2K (below).
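The 2×2 averaging step can be sketched in Python as follows. The pixel values in `block_4k` are made up for illustration, and `promote` and `box_downscale_2x` are hypothetical helpers implementing the simple four-pixel average assumed above, not any particular application's scaler.

```python
def promote(img, shift=2):
    """8-bit -> 10-bit working precision by multiplying by 4 (left shift by 2)."""
    return [[v << shift for v in row] for row in img]

def box_downscale_2x(img):
    """Replace each 2x2 block with the average of its four pixels.
    A sum of four multiples of 4 is itself a multiple of 4, so // is exact here."""
    h, w = len(img), len(img[0])
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) // 4
         for x in range(0, w, 2)]
        for y in range(0, h, 2)
    ]

# Hypothetical 4x4 block of 8-bit grayscale values from the "4K" image.
block_4k = [
    [100, 101, 100, 100],
    [100, 100, 101, 101],
    [101, 101, 102, 101],
    [101, 100, 101, 102],
]
print(box_downscale_2x(promote(block_4k)))  # [[401, 402], [403, 406]]
```

None of the four outputs is a multiple of 4: 401, for instance, sits between the promoted 8-bit levels 400 and 404, a value a 2K camera recording at 8 bits could not produce.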

It should now be apparent where the increased tonal precision comes from. After import and conversion to 10 bits, the 2K source image will only have values that are multiples of 4 (that is, still only 8 meaningful bits). The 4K-to-2K image, on the other hand, uses the whole 10-bit coding space.
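To check the claim exhaustively, this sketch enumerates every output level each path can produce, under the same assumptions (multiply-by-4 promotion, four-pixel average). Since (4a + 4b + 4c + 4d) / 4 = a + b + c + d, the 4K-to-2K levels are simply all sums of four 8-bit values. `reachable_sums` is a hypothetical helper.

```python
def reachable_sums(n_samples, max_value=255):
    """All sums of n_samples terms, each an integer in [0, max_value]."""
    sums = {0}
    for _ in range(n_samples):
        sums = {s + v for s in sums for v in range(max_value + 1)}
    return sums

# 2K shot in-camera, promoted to 10 bits: only multiples of 4.
native_2k = {v * 4 for v in range(256)}

# 4K-to-2K: the average of four promoted samples equals the sum of the four 8-bit samples.
downscaled_2k = reachable_sums(4)

print(len(native_2k))  # 256
print(len(downscaled_2k), min(downscaled_2k), max(downscaled_2k))  # 1021 0 1020
```

Every integer from 0 to 1020 is reachable; only the top three 10-bit codes go unused, because the multiply-by-4 convention tops out at 255 × 4 = 1020.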

In color images there are 3 channels, but the principle remains the same: the discussion above applies per channel. Things get more interesting when chroma subsampling comes into play. I won’t go into the details of what chroma subsampling is; see the Wikipedia article on it, just in case.

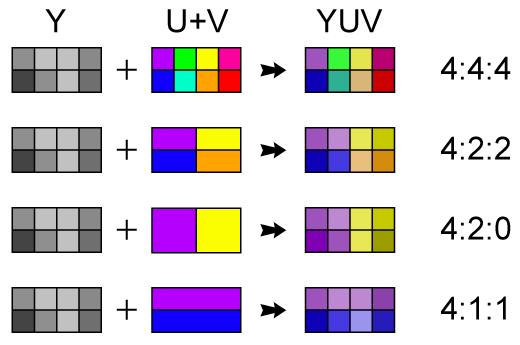

Chroma subsampling ratios 4:2:0 and 4:2:2 are often used in video. 4:4:4 means no subsampling. (image from Wikimedia)

The image above makes it clear that a block of 2×2 neighboring pixels contains 1 true chroma sample per chroma channel in 4:2:0 subsampled video, and 2 true chroma samples per chroma channel in 4:2:2 subsampled video. This means that downscaling 8-bit 4:2:0 video from 4K to 2K results in 4:4:4 video (no chroma subsampling) with 10-bit luma and 8-bit chroma channels, because the downscaled luma averages 4 samples while the downscaled chroma uses only 1. Downscaling 8-bit 4:2:2 video from 4K to 2K results in 4:4:4 video with 10-bit luma and 9-bit chroma channels, since the downscaled chroma averages two samples in this case.
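The same counting argument can be written as a small Python sketch. It assumes the ideal case described here (no compression, no noise, a plain 2×2 average) and that averaging n samples adds log2(n) bits; the per-block sample counts are the ones stated above, with 4:4:4 added for comparison. All names are hypothetical.

```python
import math

LUMA_SAMPLES_PER_2X2 = 4
CHROMA_SAMPLES_PER_2X2 = {"4:4:4": 4, "4:2:2": 2, "4:2:0": 1}

def ideal_bits(source_bits, n_samples):
    """Ideal bit depth of the average of n_samples values of source_bits each."""
    return source_bits + math.log2(n_samples)

def downscaled_depths(source_bits, subsampling):
    """(luma bits, chroma bits) of the 2K 4:4:4 result of a 2x2 downscale."""
    return (ideal_bits(source_bits, LUMA_SAMPLES_PER_2X2),
            ideal_bits(source_bits, CHROMA_SAMPLES_PER_2X2[subsampling]))

print(downscaled_depths(8, "4:2:0"))  # (10.0, 8.0)
print(downscaled_depths(8, "4:2:2"))  # (10.0, 9.0)
```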

There are a couple of important points to consider here:

- Pretty much all sensors used in video are Bayer sensors. Each raw pixel is sensitive to only one of red, green and blue, so a 2×2 block of pixels has two green, one blue and one red pixel. This means that for each pixel of debayered 4K video coming from a 4K sensor, two of the three color channels are interpolated from the surrounding pixels. Technically this limits the chroma precision gain from downscaling (though this is less of a concern for chroma subsampled video).

- Compression should also be taken into account. Everything above describes an ideal case with no lossy compression whatsoever. Lossy compression tends to discard high-frequency detail, essentially smoothing the values of neighboring pixels. This lessens the precision gain on downscale but does not cancel it, as the examples below show. In any case, the heavier the compression, the smaller the actual precision gain from downscaling.
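A toy Python illustration of why smoothing eats into the gain, assuming the same multiply-by-4 promotion and four-pixel average as before. The two blocks are invented: one keeps small pixel-to-pixel variation, the other has been flattened to a single value, as heavy compression might do in an extreme case.

```python
def average_promoted(block_8bit):
    """Average four 8-bit samples after promoting each to 10 bits (x4)."""
    return sum(v * 4 for v in block_8bit) // 4

detailed = [100, 101, 101, 101]   # fine variation survives encoding
flattened = [101, 101, 101, 101]  # lossy codec smoothed the block to one value

print(average_promoted(detailed))   # 403: between the 8-bit levels 400 and 404
print(average_promoted(flattened))  # 404: same as a native 2K pixel (101 * 4)
```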

4K video for 2K delivery: a synthetic example

All this is theory. To check it under real-world conditions I ran a synthetic test, using some FullHD video and downscaling it to 960×540. The simulation below was created with the following steps in DaVinci Resolve 10 (Resolve works in 32 bits internally):

1) Full-color (4:4:4), high bit depth (14-bit) uncompressed 1080p video imported into DaVinci Resolve. The source footage comes from well-exposed Canon Magic Lantern raw images.

2) Full-size video exported with the 175 Mbps 8-bit 4:2:2 DNxHD intraframe codec (23.976 fps). This is our stand-in for 4K 8-bit 4:2:2 video.

3) Half-size video exported with the same 175 Mbps 8-bit 4:2:2 DNxHD intraframe codec (as a 960×540 image centered with big black borders in a 1080p frame). This is our stand-in for 2K 8-bit 4:2:2 video.

4) Both the full-size and half-size clips imported back into Resolve on a 960×540 timeline. The full-size video was downscaled to timeline resolution with the Smoother scaling filter, creating our “4K-to-2K” 10-bit footage. The half-size video was imported without scaling (a 1:1 center crop of the 1080p frame).

5) Equal gamma lift applied to both clips to raise the brightness a bit and stress the image, then a bit of contrast added to make the damage more visible.

6) The same frame exported (uncompressed) from both clips.
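A rough, pure-Python model of steps 1 to 5 can make the mechanism concrete. It is a simplification under stated assumptions, not a reproduction of the Resolve test: grayscale only, no DNxHD compression, no chroma subsampling, a dark synthetic ramp with Gaussian noise standing in for the raw footage, a 2×2 box average standing in for Resolve's Smoother filter, and a hypothetical square-root curve standing in for the gamma lift. Instead of exporting frames, it counts how many distinct levels survive in each clip.

```python
import random

def quantize(v, bits):
    """Round a 0..1 float to the nearest code value of the given bit depth."""
    top = (1 << bits) - 1
    return round(min(max(v, 0.0), 1.0) * top) / top

def box_2x2(img):
    """Average each 2x2 block in float math (stand-in for a 32-bit float pipeline)."""
    return [
        [(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
         for x in range(0, len(img[0]), 2)]
        for y in range(0, len(img), 2)
    ]

def gamma_lift(v, gamma=0.5):
    """Hypothetical stress grade: a power-law lift that stretches the shadows."""
    return v ** gamma

def distinct_levels(img):
    """Number of distinct output values after the lift (rounded to absorb float noise)."""
    return len({round(gamma_lift(v), 6) for row in img for v in row})

rng = random.Random(42)
W, H = 256, 16  # toy "4K" frame; the "2K" frame is W/2 x H/2

# Step 1: high-precision source, a dark ramp (0..5% signal) with mild noise.
source = [[x / W * 0.05 + rng.gauss(0, 0.002) for x in range(W)] for _ in range(H)]

# Steps 2-3: "4K" recorded at 8 bits; "2K" = same scene scaled to half size, then 8 bits.
full_8bit = [[quantize(v, 8) for v in row] for row in source]
half_8bit = [[quantize(v, 8) for v in row] for row in box_2x2(source)]

# Step 4: downscale the 8-bit "4K" clip in float precision.
full_to_half = box_2x2(full_8bit)

# Step 5: same lift on both; more distinct levels means smoother shadow gradations.
print("native 2K levels:", distinct_levels(half_8bit))
print("4K-to-2K levels: ", distinct_levels(full_to_half))
```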

Here are a couple of crops for comparison. Blacks are the most sensitive part of the range because of their low tonal precision, so lifting stresses them most. That’s also the range that gains the most precision in practice from the downscaling shenanigans.

The images downscaled for increased precision are on the right. They are enlarged to 200% for better legibility and saved as 8-bit PNG files, so no further image compression is at play here.

These demonstrate the superiority of the “4K-to-2K” video: it handles the processing significantly better. Note also the difference in sharpness. This example uses 175 Mbps 8-bit 4:2:2 DNxHD. Other codecs commonly used for compressing HDMI feeds may or may not handle the source signal more gracefully.
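Why lifting hurts the blacks most can be put in numbers. Assuming a hypothetical square-root lift (not the exact curve used in the test), this Python sketch measures how far apart two adjacent 8-bit input codes end up after the lift, in 8-bit output code values.

```python
def lift(v, gamma=0.5):
    """Hypothetical power-law lift; input and output are in 0..1."""
    return v ** gamma

def output_step(code, bits=8):
    """Output distance, in code values, between input codes `code` and `code + 1`."""
    top = (1 << bits) - 1
    return (lift((code + 1) / top) - lift(code / top)) * top

print(round(output_step(1), 2))    # shadow step: about 6.6 codes
print(round(output_step(254), 2))  # highlight step: about 0.5 codes
```

A single-code step near black turns into a jump of more than six output codes, while near white it shrinks to about half a code, so missing in-between levels in the shadows show up first as banding.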

So how does all this apply to real world cameras?

On the Panasonic GH4 you can record 8-bit 4:2:0 4K video internally (heavily compressed at 100 Mbps) and 10-bit 4:2:2 4K video externally. The first will give you 4:4:4 2K video with 10-bit luma and 8-bit chroma; the second, 4:4:4 2K video with 12-bit luma and 11-bit chroma. On the Sony A7S you can record 8-bit 4:2:2 4K video externally, which will give you 4:4:4 2K video with 10-bit luma and 9-bit chroma. For external recording on either camera you need a 4K external recorder such as the Atomos Shogun or the Convergent Design Odyssey 7Q.
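Plugging these recording modes into the ideal per-channel rule (averaging n samples adds log2(n) bits; 4 luma samples per 2×2 block, 2 chroma samples for 4:2:2, 1 for 4:2:0) reproduces the figures above. A Python sketch with hypothetical names; like the rest of the analysis it ignores compression and debayering, so treat the results as ideal-case figures.

```python
# (camera, recording mode, 4K bit depth, chroma subsampling), as listed in the text.
MODES = [
    ("Panasonic GH4", "internal", 8, "4:2:0"),
    ("Panasonic GH4", "external", 10, "4:2:2"),
    ("Sony A7S", "external", 8, "4:2:2"),
]

# Samples per channel that a 2x2 average folds into one 2K pixel.
SAMPLES = {"luma": 4, "4:2:2": 2, "4:2:0": 1}

def extra_bits(n):
    """log2(n) for a power-of-two sample count."""
    return n.bit_length() - 1

def downscaled_2k(bits, subsampling):
    """Ideal (luma bits, chroma bits) of the 2K 4:4:4 result."""
    return bits + extra_bits(SAMPLES["luma"]), bits + extra_bits(SAMPLES[subsampling])

for camera, mode, bits, subsampling in MODES:
    luma, chroma = downscaled_2k(bits, subsampling)
    print(f"{camera} ({mode}, {bits}-bit {subsampling}): {luma}-bit luma, {chroma}-bit chroma")
```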
